Longer Works

Works that took at least a couple of weeks to complete

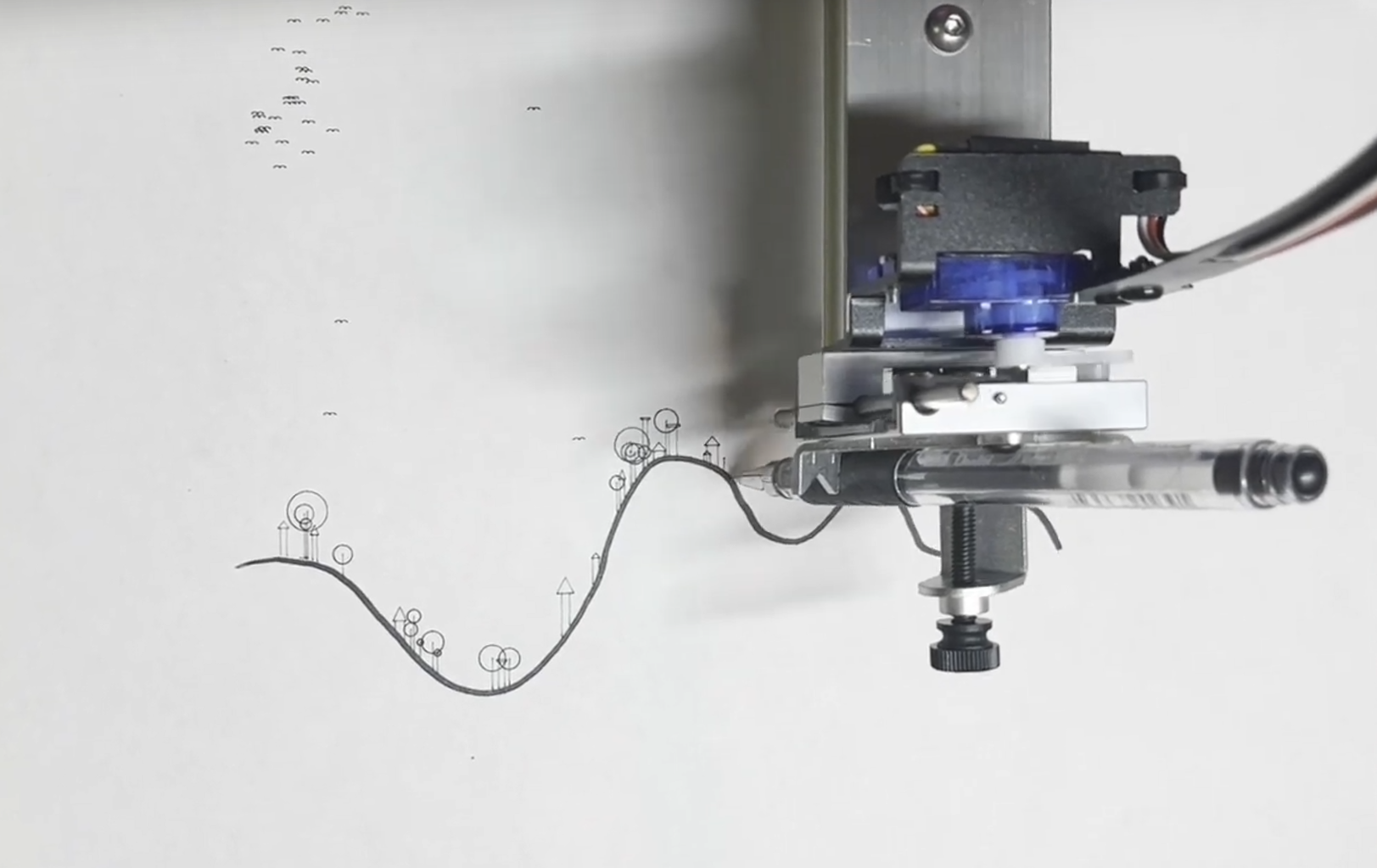

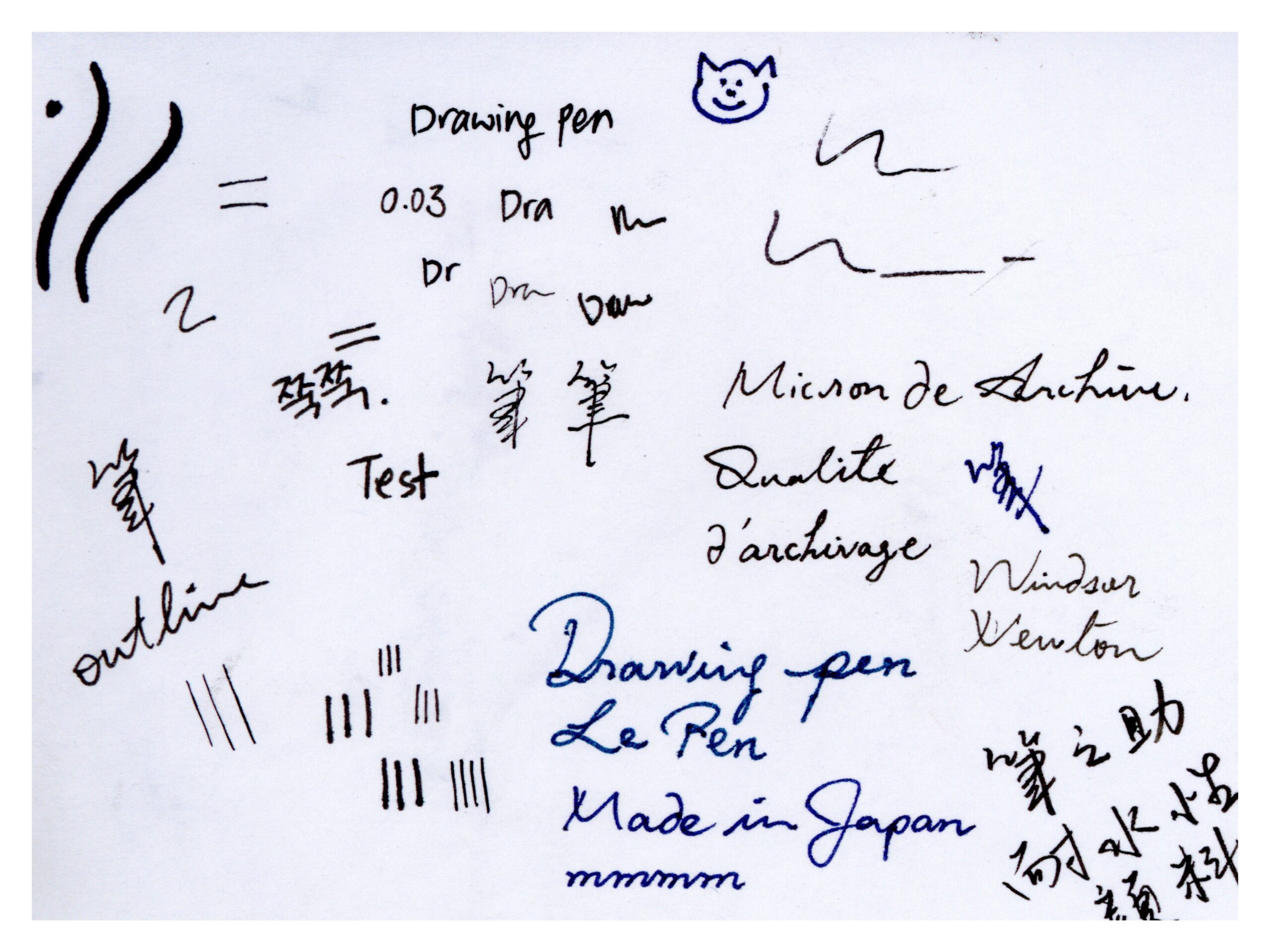

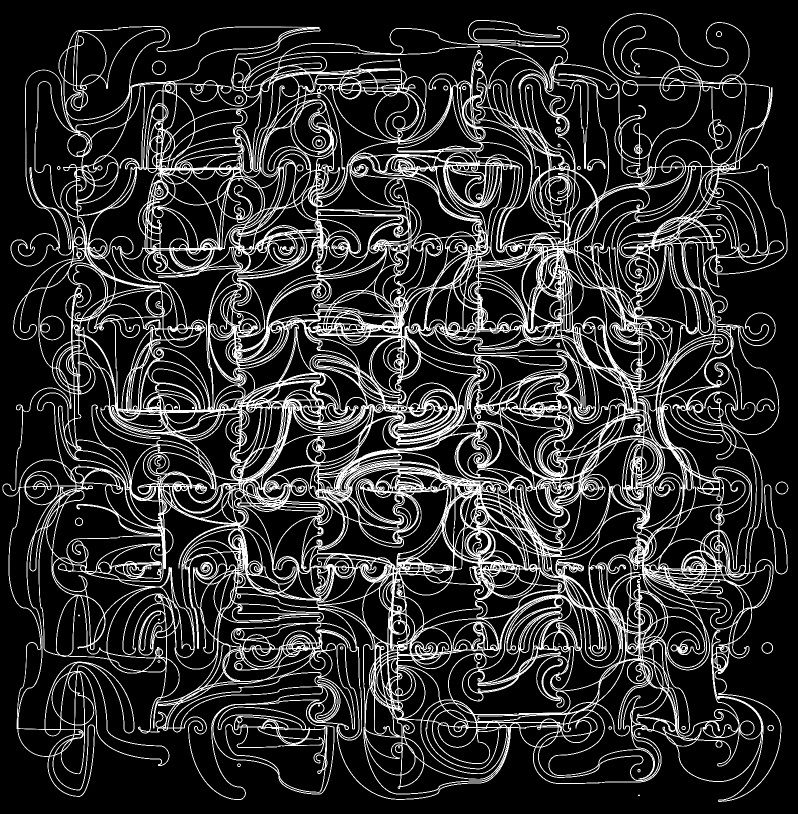

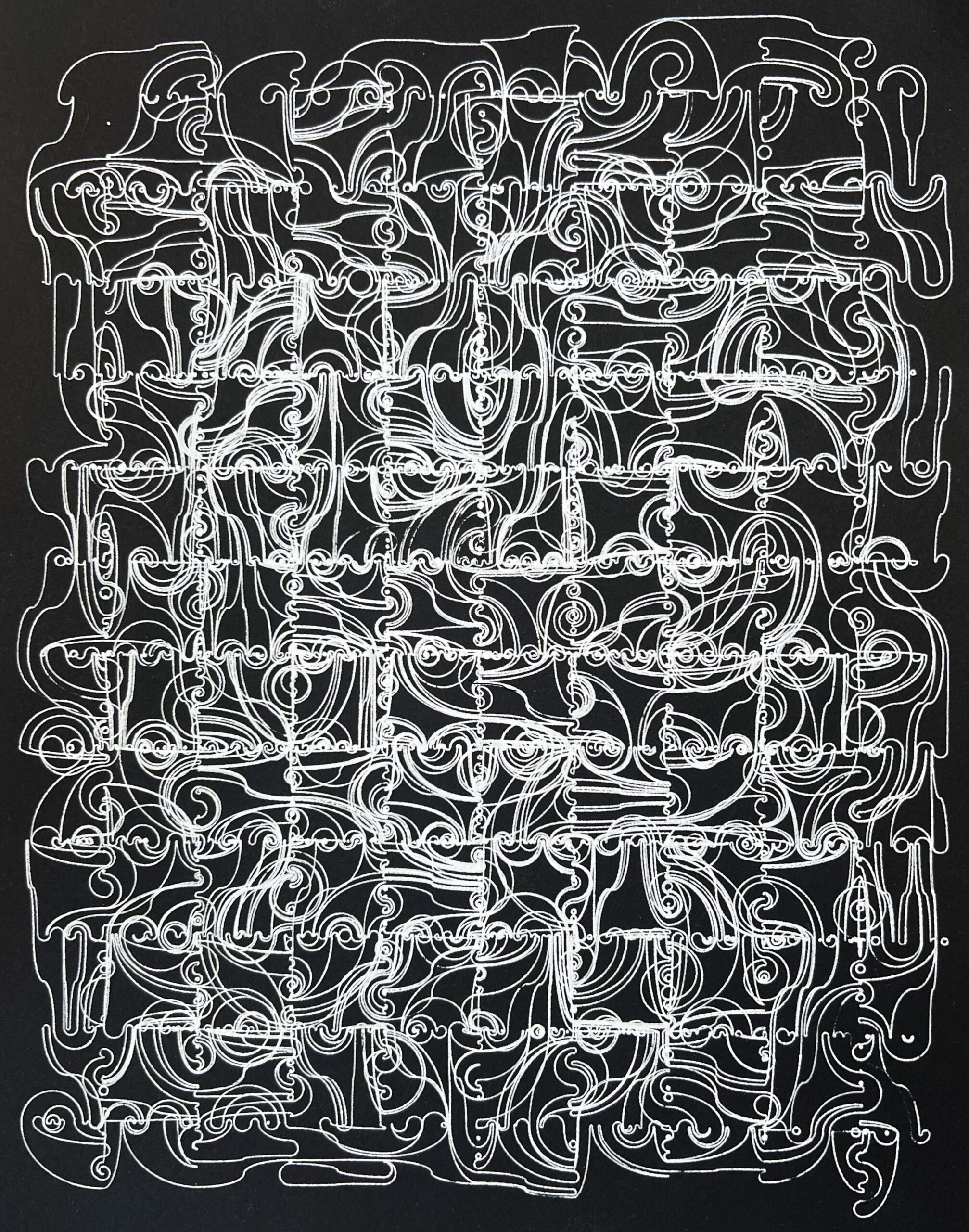

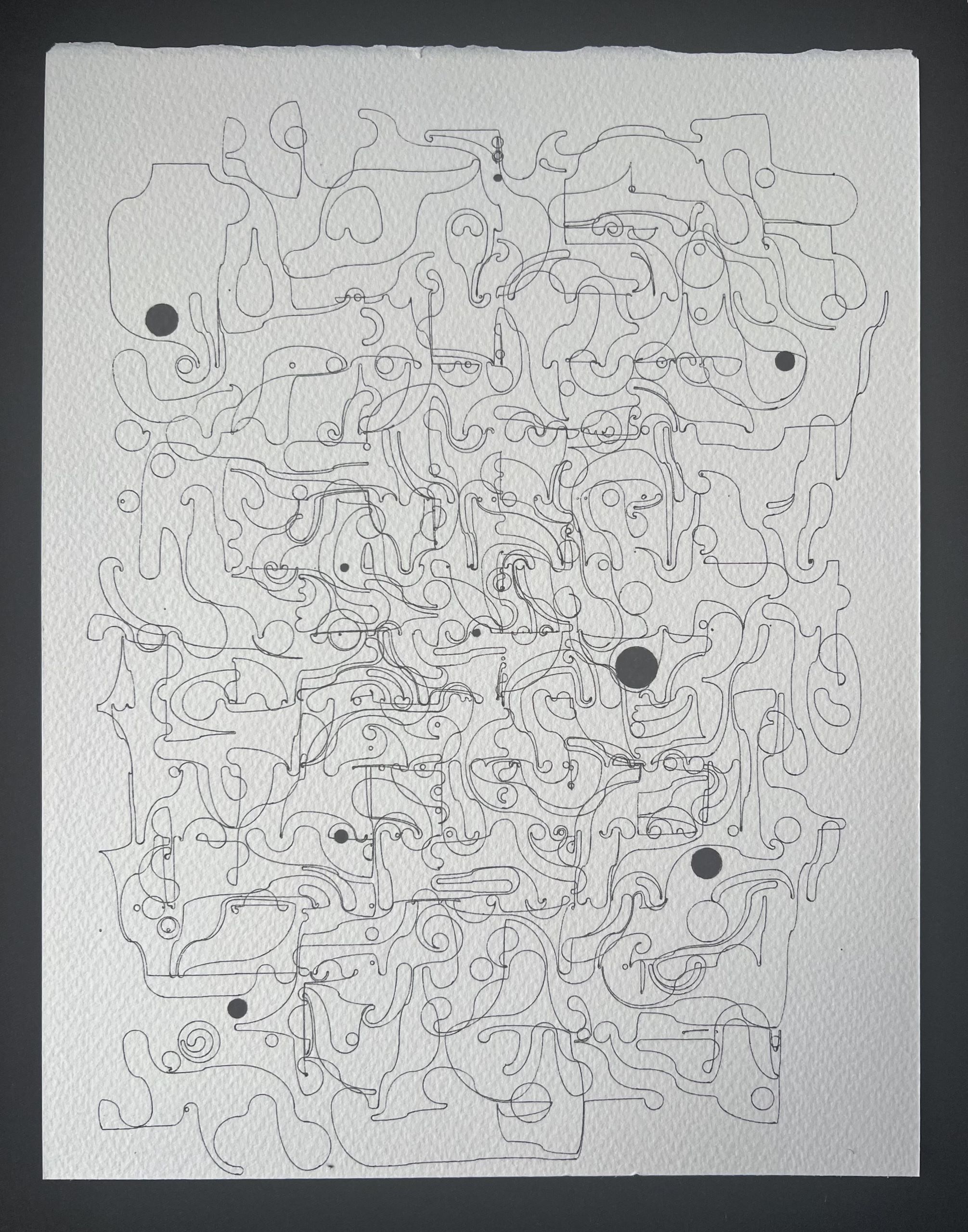

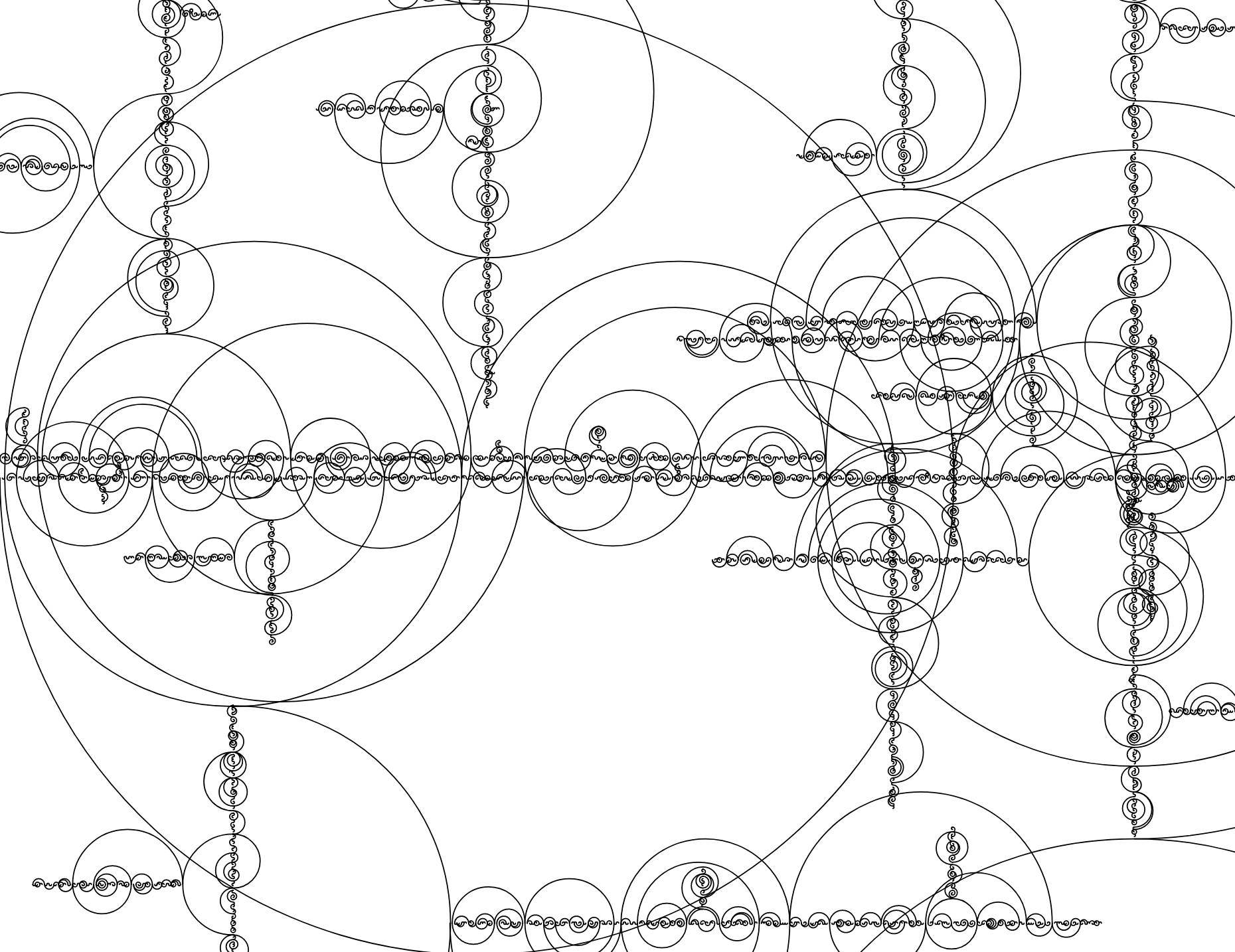

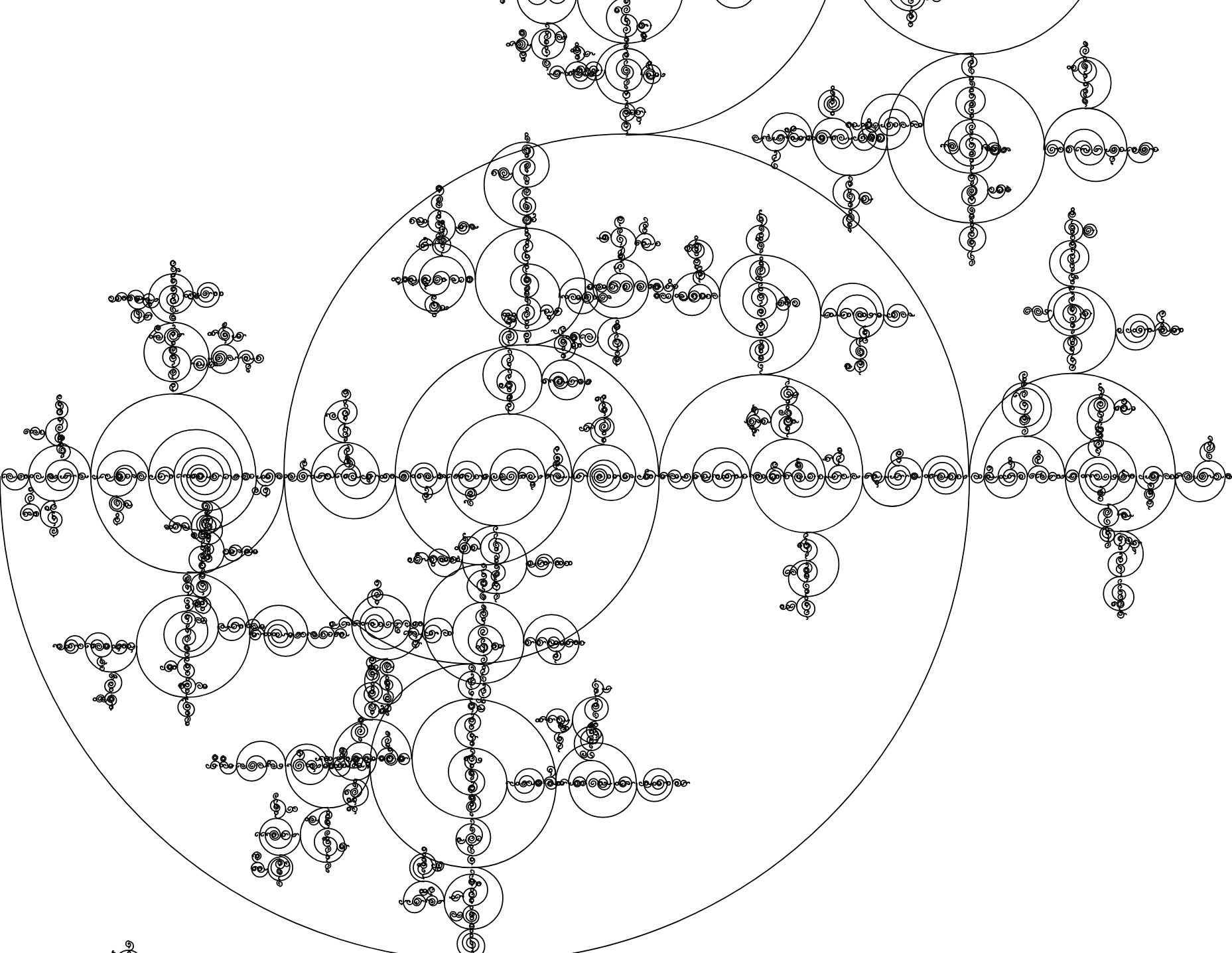

Pen and paper drawings created through the collaboration of human and machine

Generative asemic (without meaning) script mimicking the structures and style of handwriting

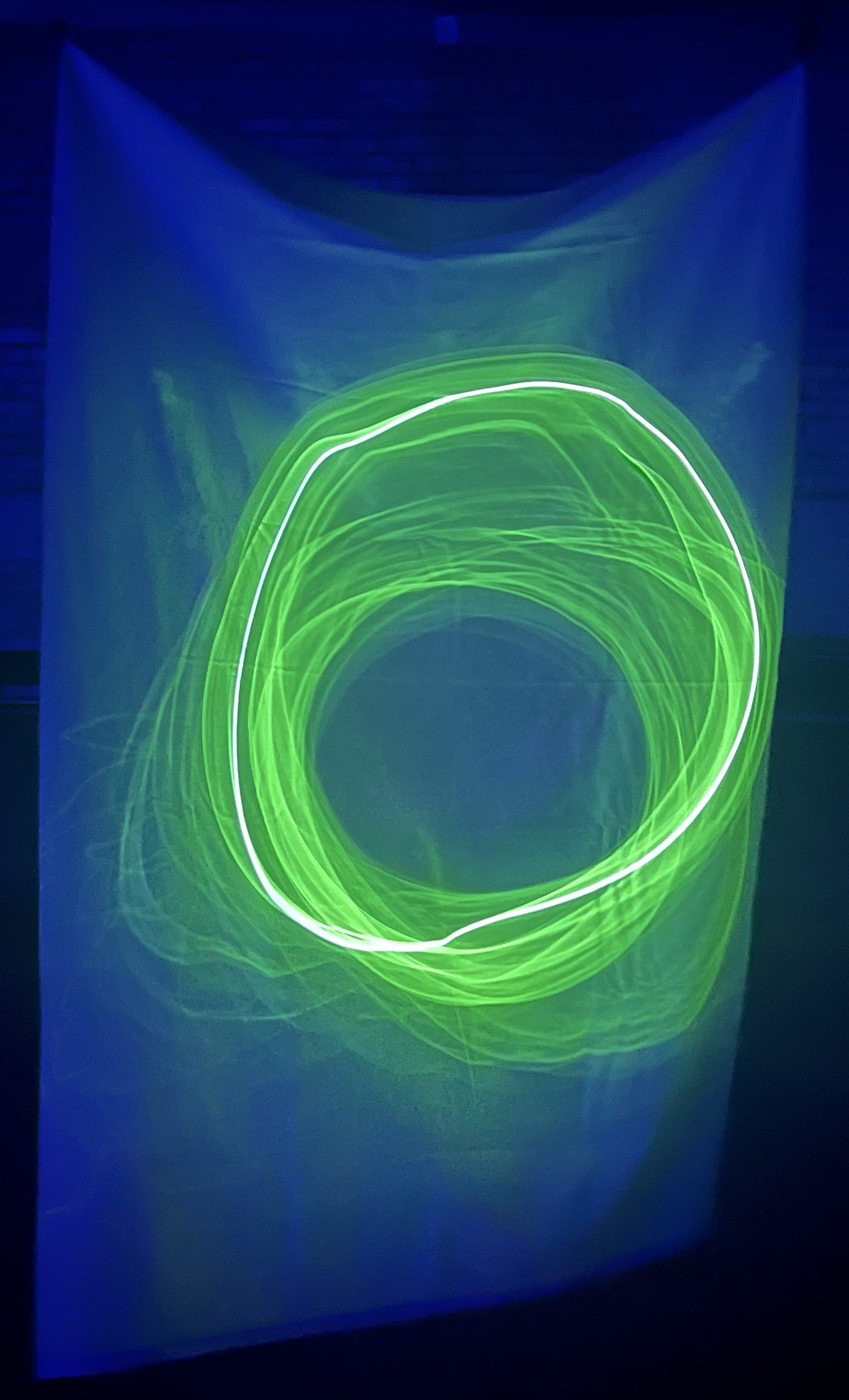

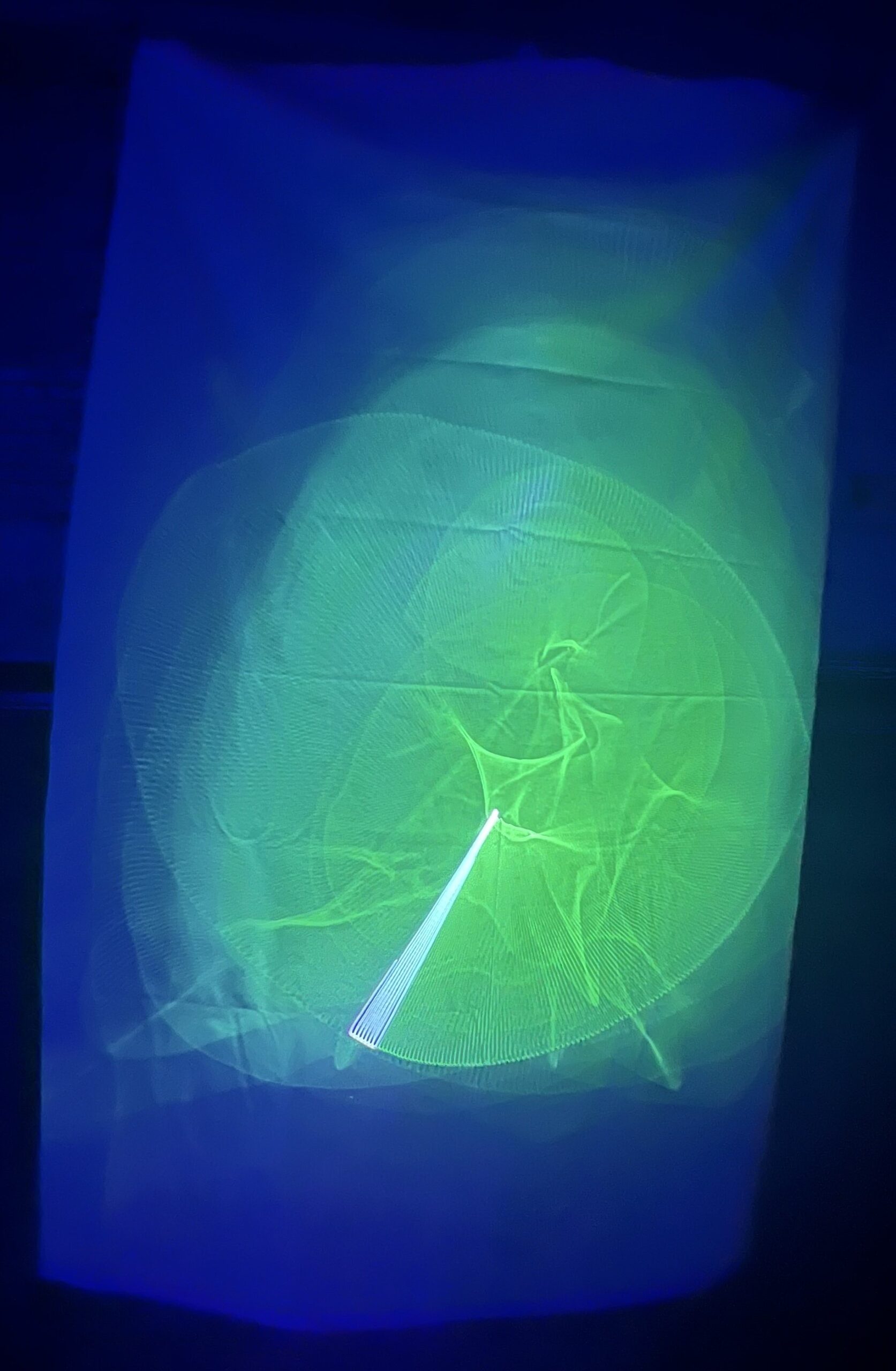

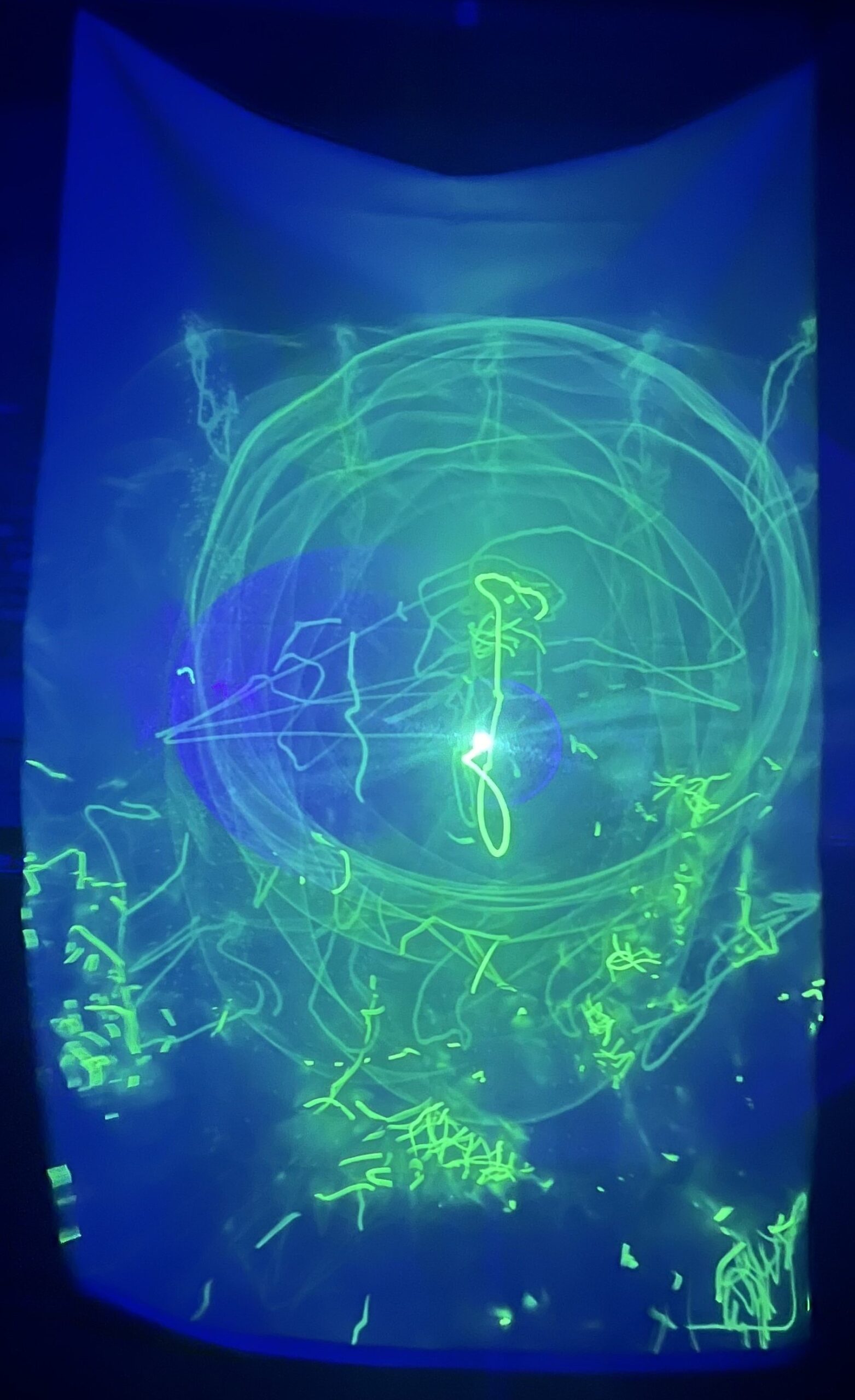

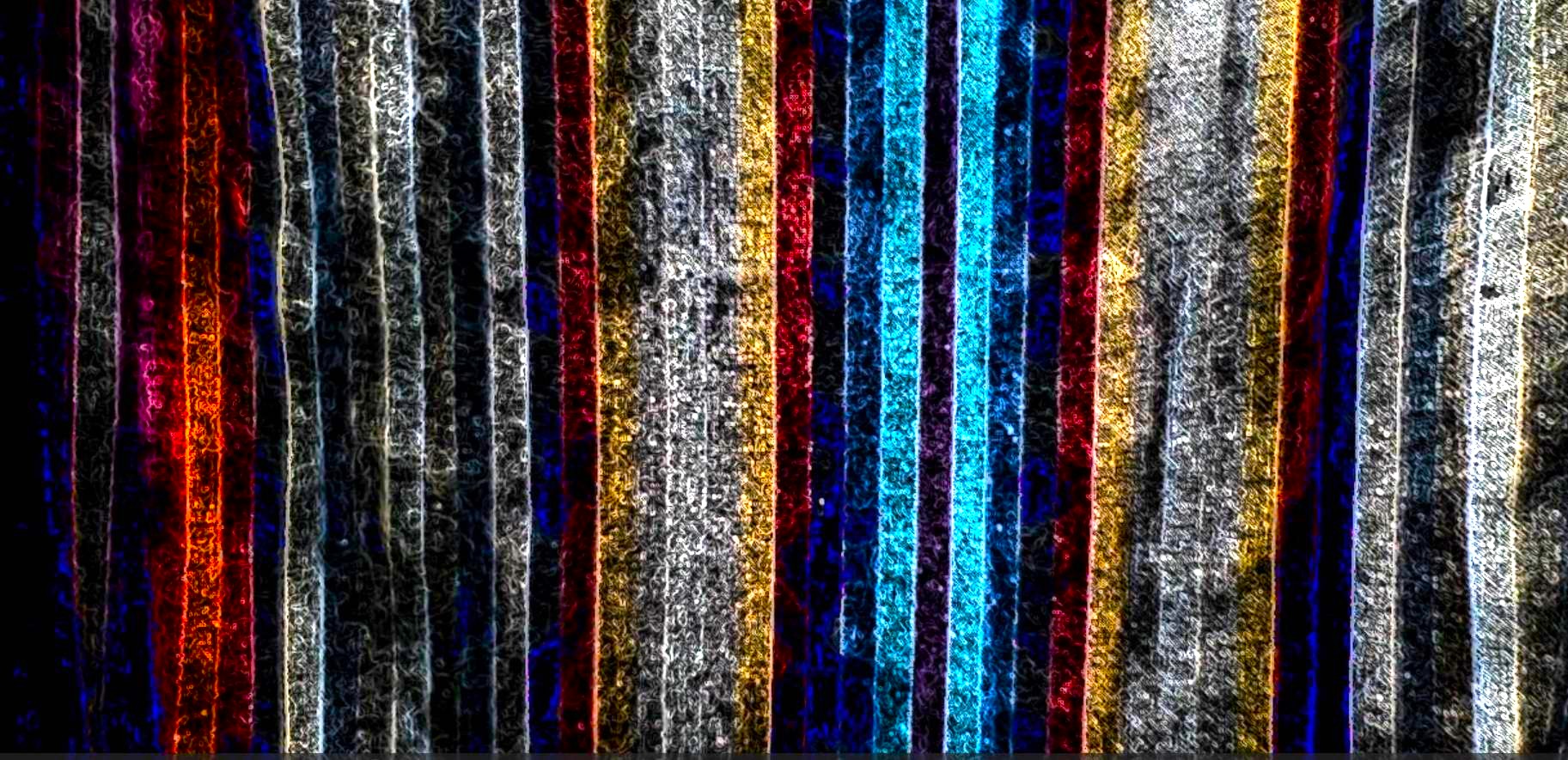

Lightsail

September 2024 - November 2024

Shortwave laser, Galvanometer, TouchDesigner, Phosphorescent Fabric, Fan

Inspired by Hans Haacke’s Blue Sail, this piece is a translation of the gusting irregularity of wind over time. Lightsail uses a custom-built galvanometer to project a shortwave near-ultraviolet laser onto a phosphorescent sail fluttering in the wind of a fan. Controlled by programs I designed in TouchDesigner, the galvanometer rapidly moves the point of the laser, creating the illusion of continuous shapes. The high-energy light of the laser excites the phosphorescent sail, causing it to glow wherever it is struck. As the wind changes the relative positions of the laser’s point and the sail, the sail fleetingly captures a record of its own shifting motion.

Lightsail installed at the local Pittsburgh art show Tech25

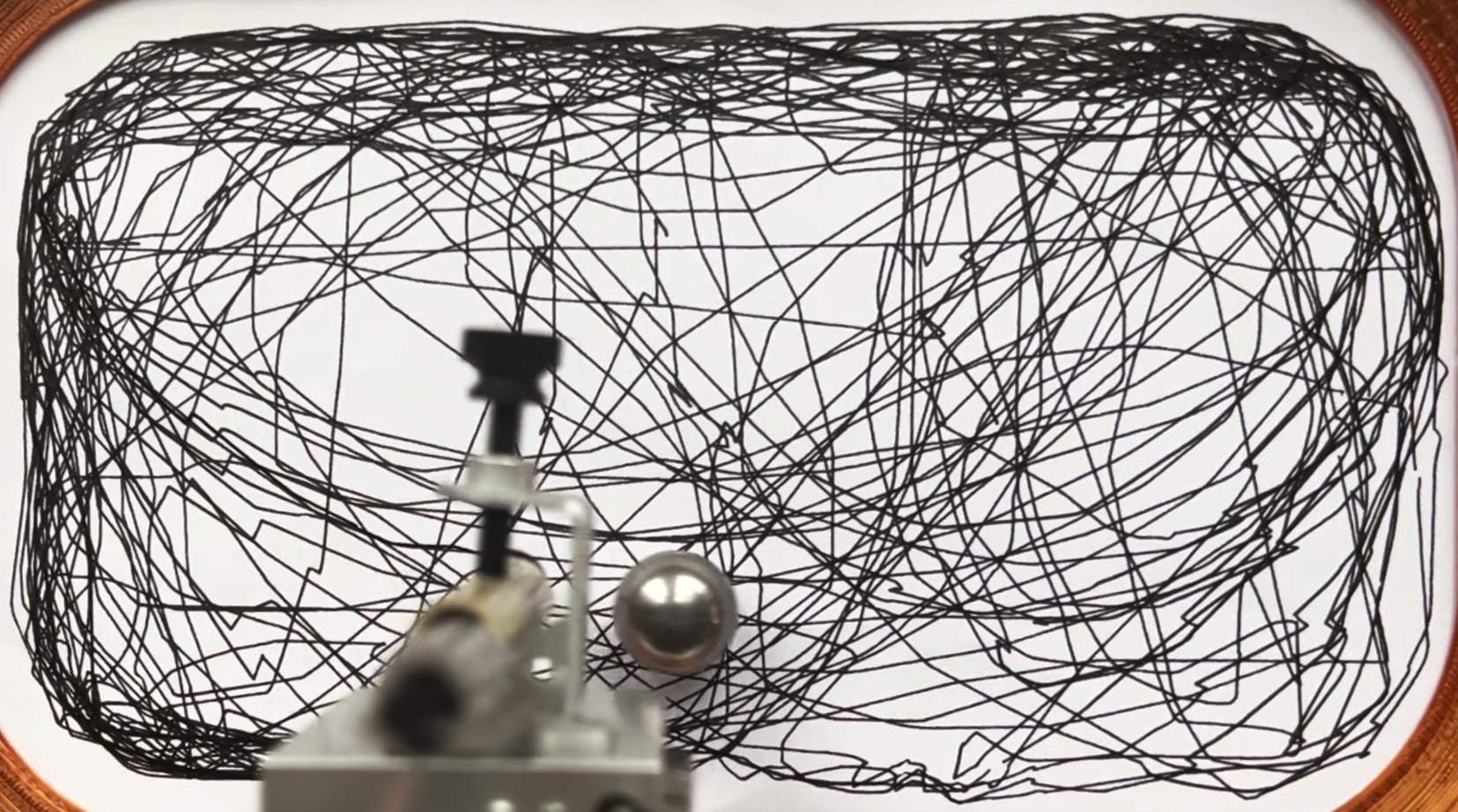

Drawings created as a byproduct of an endless chase across the page

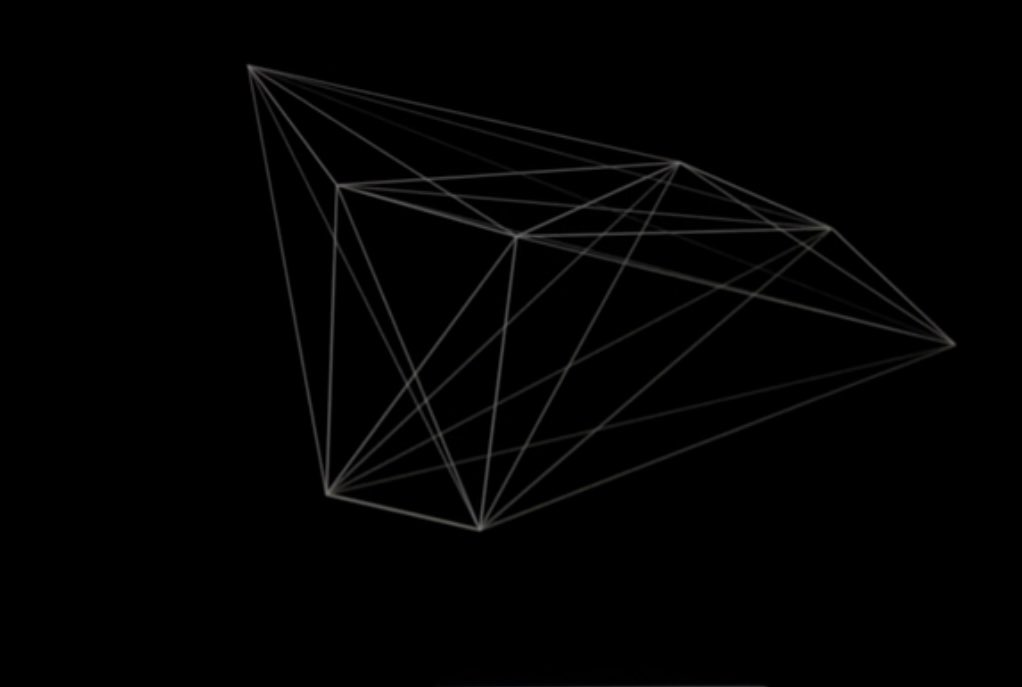

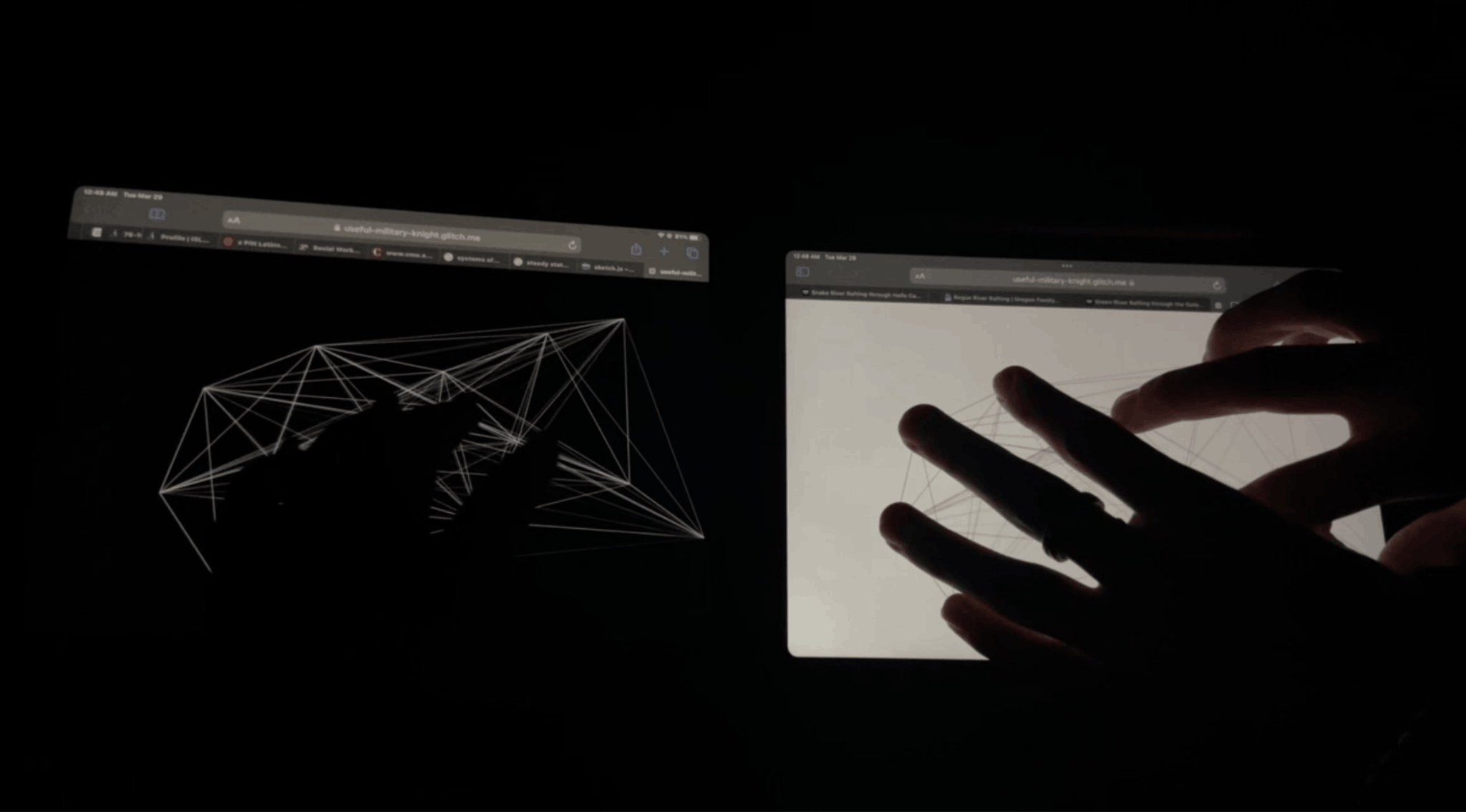

What We Share

March 2022 - April 2022

Glitch

What We Share allows users to feel a sense of connection by physically touching the same virtual space. Linking fingers through the Share interface creates a sense of closeness with others, yet the impassable barrier of the screen remains.

Inspired by my time during quarantine, What We Share brings attention to the components of human connection that are preserved and lost through virtual space. Awareness of what is missing brings users’ attention to all that human connection can include. What We Share uses a web server hosted through Glitch.com that tracks the multitouch points from all current users. I capture this information and generate a web of attenuated connection between all touchpoints.
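One way a web of attenuated connections between touchpoints could be computed is sketched below. This is an illustrative assumption, not the project's actual code: the function name `connection_web`, the normalized coordinates, the `max_dist` cutoff, and the linear falloff are all hypothetical choices.

```python
import math
from itertools import combinations


def connection_web(points, max_dist=0.5):
    """Return (i, j, strength) for each pair of touchpoints closer than max_dist.

    points   -- list of (x, y) touch positions, assumed normalized to 0..1
    max_dist -- hypothetical cutoff beyond which no line is drawn
    strength -- falls linearly from 1.0 (same spot) toward 0.0 (at max_dist)
    """
    edges = []
    for (i, p), (j, q) in combinations(enumerate(points), 2):
        d = math.dist(p, q)
        if d < max_dist:
            edges.append((i, j, 1.0 - d / max_dist))
    return edges
```

The `strength` value could then drive the opacity or thickness of each drawn line, so that distant fingers are joined only faintly.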

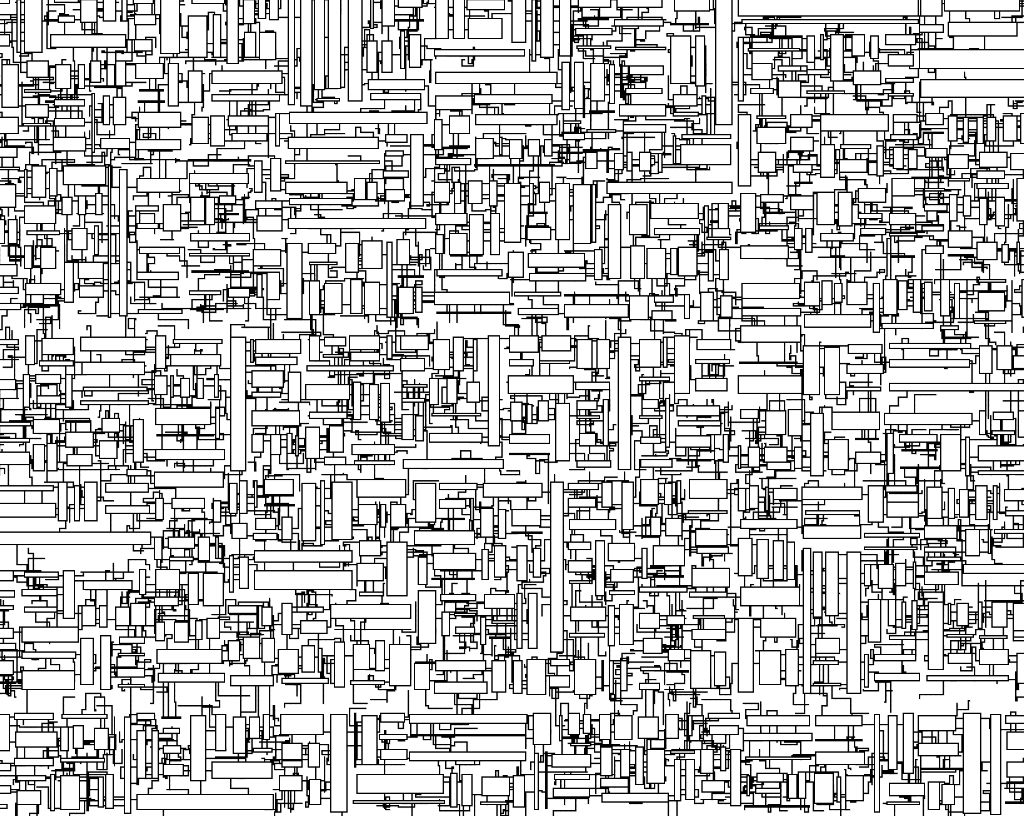

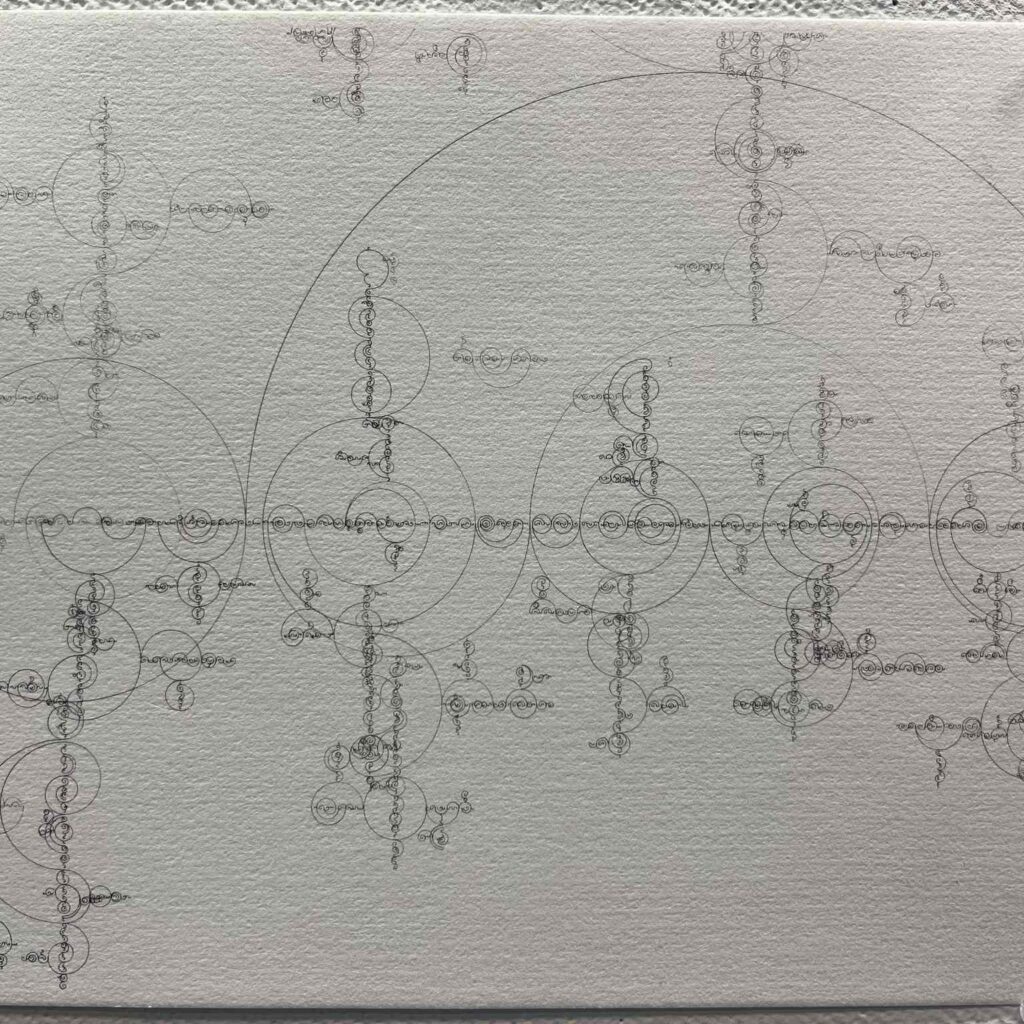

Pen test paper collected weekly from a Blick art store

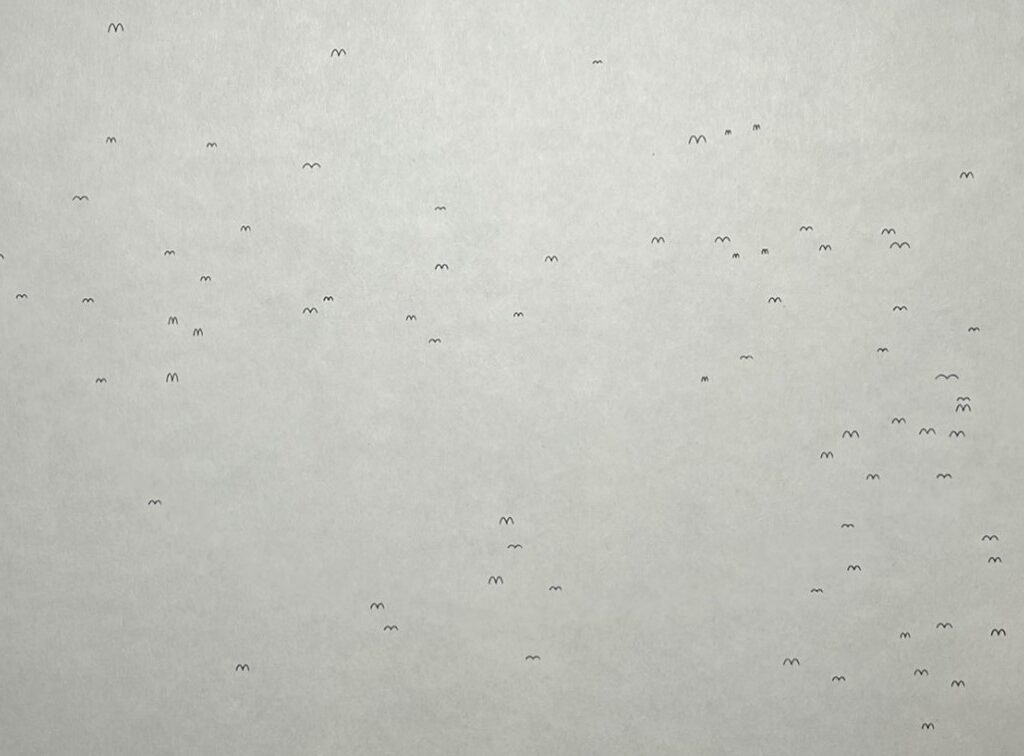

Flock Finder

October 2024 - November 2024

Axidraw, Webcam, YOLOv8, OpenCV

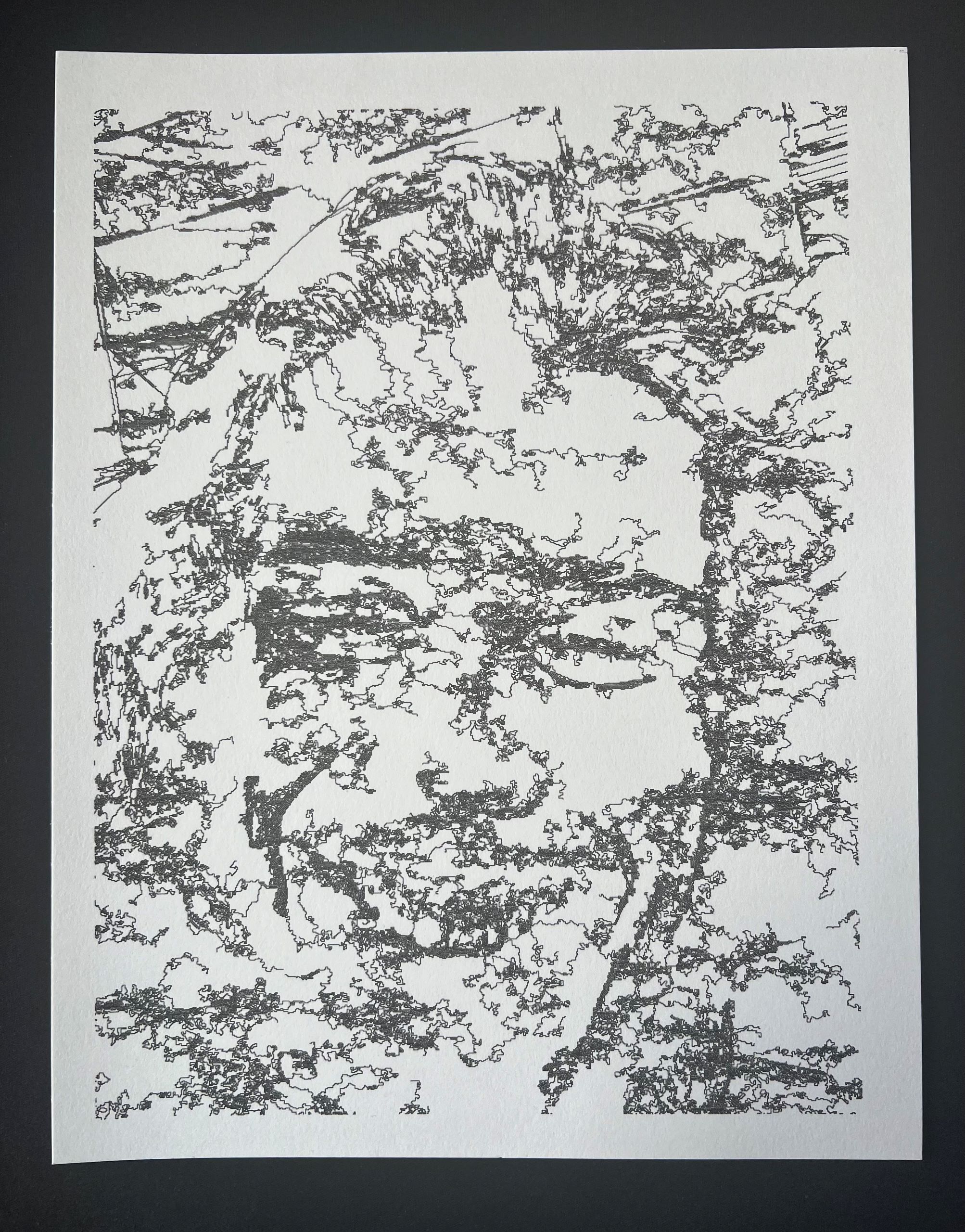

In real time, Flock Finder isolates birds from their environment and captures the motion of a flock over time on a single sheet of paper. In doing so, the environment is reintroduced from the perspective of birds. A line of birds represents the peaked roof of a building. That blank spot is a noisy intersection. That spiral of birds is a thermal. Flock Finder uses birds as a sensor to remap space.

I used YOLOv8 object detection to find birds in real time from a high-resolution webcam pointed out of my window. When a bird is detected, its coordinates and proximity are sent to my Axidraw pen plotter, which draws an abstracted bird at the appropriate position and scale on my paper.
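The step from a detection to a mark on paper can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the project's code: the function name `bbox_to_plot`, the use of bounding-box width as a stand-in for proximity, and the `max_glyph` clamp are all hypothetical, and the detector and plotter calls are left out entirely.

```python
def bbox_to_plot(bbox, frame_size, paper_size, max_glyph=2.0):
    """Map a detection box in camera pixels to a position and size on paper.

    bbox       -- (x1, y1, x2, y2) corners of the detection, in pixels
    frame_size -- (width, height) of the camera frame, in pixels
    paper_size -- (width, height) of the paper, in plotter units (e.g. inches)
    max_glyph  -- hypothetical upper bound on the drawn bird's size
    """
    x1, y1, x2, y2 = bbox
    frame_w, frame_h = frame_size
    paper_w, paper_h = paper_size

    # Center of the box, rescaled from frame coordinates to paper coordinates.
    cx = (x1 + x2) / 2 / frame_w * paper_w
    cy = (y1 + y2) / 2 / frame_h * paper_h

    # Assumption: a wider box means a nearer bird, so box width sets the scale.
    scale = min((x2 - x1) / frame_w * paper_w, max_glyph)
    return cx, cy, scale
```

For example, `bbox_to_plot((860, 490, 1060, 590), (1920, 1080), (11, 8.5))` places the bird at the center of an 11 by 8.5 sheet, since the box is centered in the frame.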

Find here a typology of birds-from-my-window created by allowing Flock Finder to run continuously for 15 days while replacing the paper each day.

OpenProcessing Selection

January 2022 - Ongoing

p5.js

Jeremiah

November 2023

p5.js

Jeremiah is a procedurally animated creature that simulates the behavior of predatory insects. I used the uncannily natural feel of live-calculated motion combined with sharp starts and stops to give the sense of a predator restrained in a cage.

TDF3s

November 2023 - December 2023

TouchDesigner

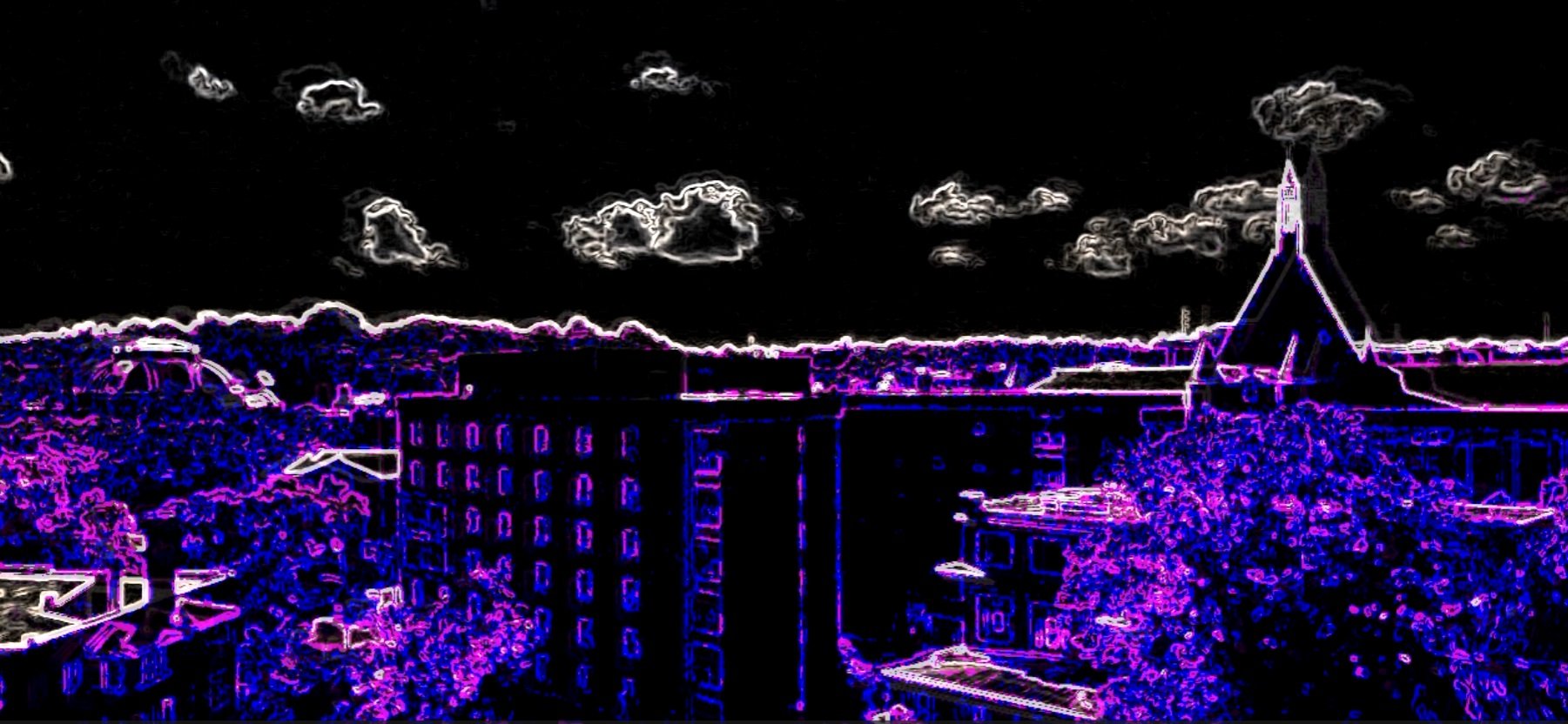

This piece uses TouchDesigner to create a real-time generative video that reacts to the type of music it picks up in the background. The program is also sensitive to the amount and tone of light it receives and adjusts the thresholds of its line detection algorithm accordingly.

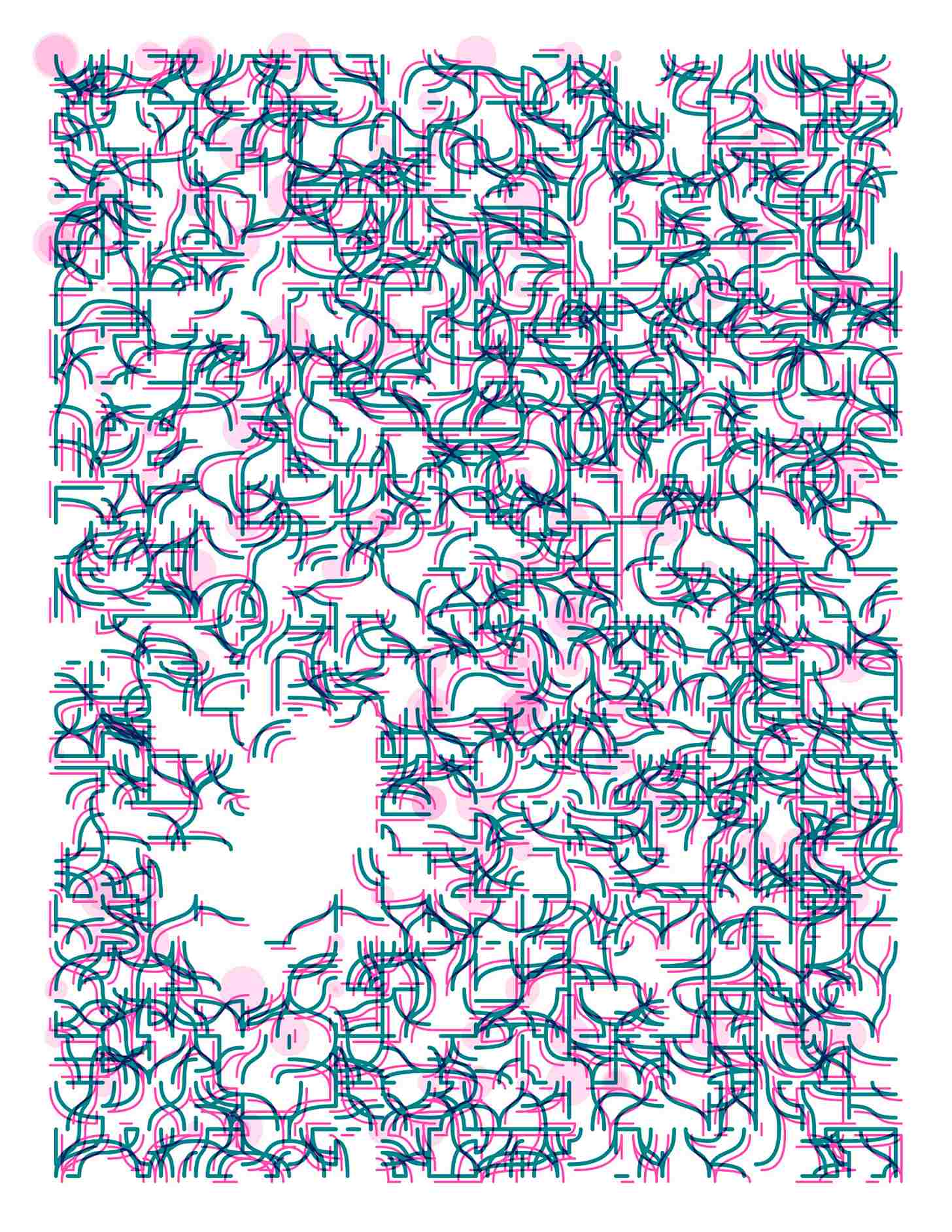

Pen Plots

January 2024 - Ongoing

Vsketch, Python, p5.js, Axidraw

Using pen plotters such as the Axidraw, I explore the intersection of the physical and the computational. I use techniques including edge detection, pseudo-random walk, Truchet tiling, and interleaved recursion to create this ongoing series of pieces.
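As an illustration of one of these techniques, here is a minimal sketch of the diagonal-line variant of Truchet tiling, producing line segments a plotter could draw. It is not taken from the series itself: the function name `truchet_segments` and its parameters are hypothetical, and plain Python is used rather than any particular plotting library.

```python
import random


def truchet_segments(cols, rows, cell=1.0, seed=0):
    """Return one diagonal line segment per grid cell, oriented at random.

    Each cell gets either its top-left to bottom-right diagonal or its
    bottom-left to top-right diagonal. A fixed seed makes the pattern
    reproducible, which is useful when re-plotting the same piece.
    """
    rng = random.Random(seed)
    segments = []
    for r in range(rows):
        for c in range(cols):
            x0, y0 = c * cell, r * cell
            x1, y1 = x0 + cell, y0 + cell
            if rng.random() < 0.5:
                segments.append(((x0, y0), (x1, y1)))
            else:
                segments.append(((x0, y1), (x1, y0)))
    return segments
```

Each returned pair of endpoints can be passed to whatever line-drawing call the plotting tool provides.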

Ballistae

September 2021 - February 2022

Wood, metal, elastic

This project drew on my knowledge of mechanics and experience with physical media to create full-sized wood and metal ballistae. The metal ballista must be staked into the ground and has a range of around 350 feet.