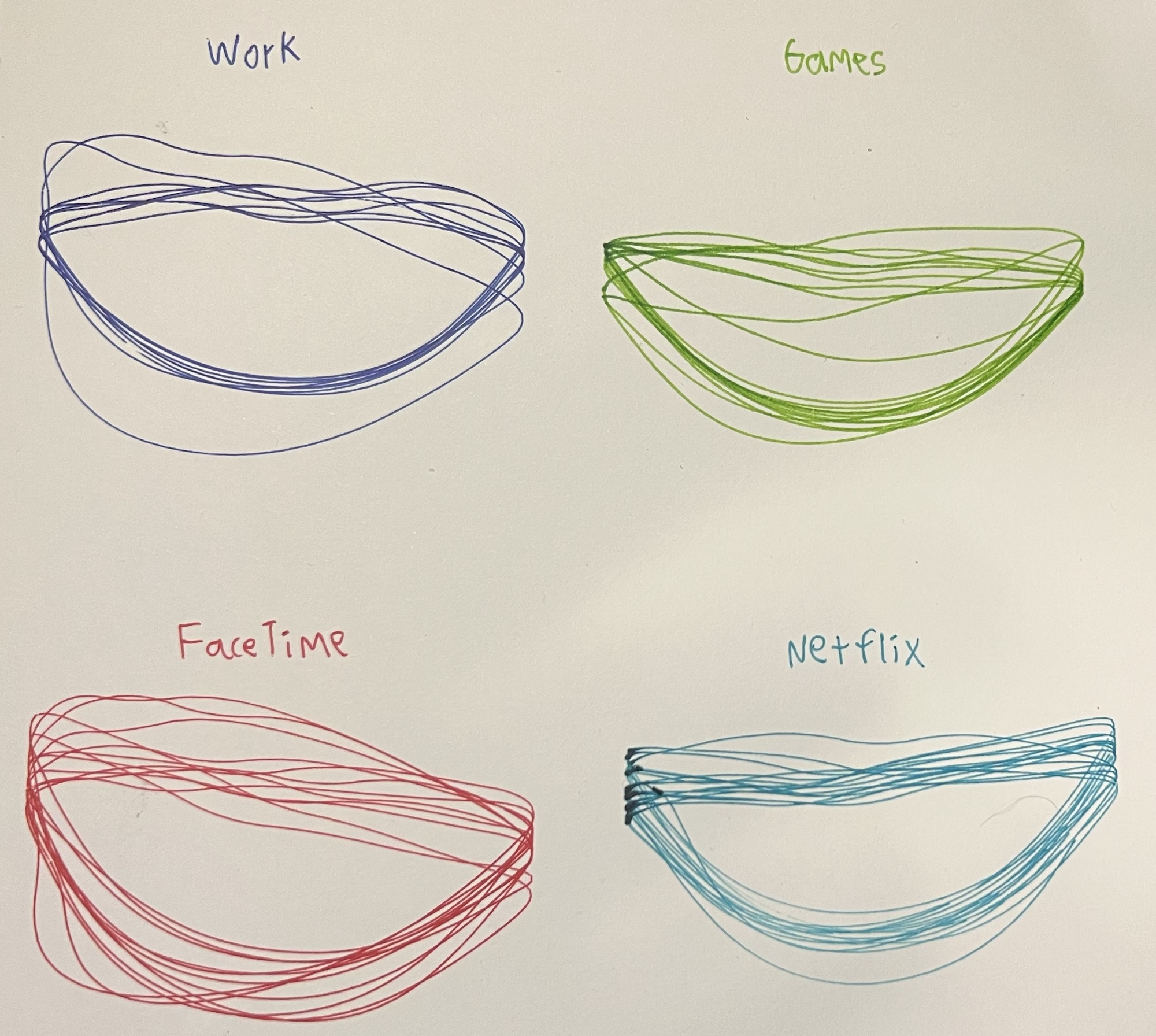

Emotion Data Postcard

This week, in Zach Lieberman’s Drawing++ class at the MIT Media Lab, our assignment was to create a data postcard on a 6″x6″ square of paper.

“Collect a data set over one day (or multiple days) and create a data postcard with a data drawing on the front and key on the back.”

We were assigned groups only to decide what topic our data postcards should be about. My group chose emotion, and then we were each left to pursue our own interpretation of that topic independently.

When I started thinking about the prompt, I realized that there are two ways that we convey emotion: consciously and unconsciously. A conscious conveyance of emotion is when you explicitly tell someone how you feel. “I am happy”, “I feel tired”. An unconscious conveyance is how you show your feelings through body language. Seeing someone laugh, seeing someone unable to meet your gaze.

Either one of these would be an effective way to measure emotion for my postcard, but I decided to take the unconscious approach as I had already decided that I wanted to use technology for this assignment, which opened a lot of potential doors for capturing unconscious body language.

The idea that I eventually landed on was to use the shape of my mouth as the source of data for my postcard. I used MediaPipe, a pre-existing face detection model by Google, to detect the contour of my lips, and then traced that contour onto my postcard with a pen plotter.
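For anyone curious what the tracing half of that idea might look like, here is a minimal sketch, not the project's actual code. It assumes the lip contour has already been detected and arrives as a list of (x, y) points normalized to the range 0 to 1, which is how MediaPipe's face landmarks are reported. It scales those points onto a 6″x6″ square and writes them out as SVG, a format many pen plotter toolchains accept. The function names, the half-inch margin, and the example contour are all made up for illustration.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]

POSTCARD_IN = 6.0  # postcard side length in inches, from the assignment


def contour_to_svg_path(points: Sequence[Point],
                        size_in: float = POSTCARD_IN,
                        margin_in: float = 0.5) -> str:
    """Scale normalized (x, y) points onto the postcard and return a closed SVG path.

    The margin is a hypothetical choice to keep the pen away from the paper edge.
    """
    if len(points) < 3:
        raise ValueError("a closed contour needs at least 3 points")
    drawable = size_in - 2 * margin_in
    coords = [(margin_in + x * drawable, margin_in + y * drawable)
              for x, y in points]
    head = "M {:.3f} {:.3f}".format(*coords[0])
    body = " ".join("L {:.3f} {:.3f}".format(x, y) for x, y in coords[1:])
    return f"{head} {body} Z"  # Z closes the loop back to the first point


def contours_to_svg(contours: Sequence[Sequence[Point]],
                    size_in: float = POSTCARD_IN) -> str:
    """Wrap one path per captured contour in an SVG sized in inches."""
    paths = "\n".join(
        f'  <path d="{contour_to_svg_path(c, size_in)}" '
        f'fill="none" stroke="black" stroke-width="0.01"/>'
        for c in contours
    )
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{size_in}in" height="{size_in}in" '
        f'viewBox="0 0 {size_in} {size_in}">\n{paths}\n</svg>'
    )


if __name__ == "__main__":
    # A made-up, very rough "lip" outline standing in for detected landmarks.
    fake_lips = [(0.2, 0.5), (0.5, 0.4), (0.8, 0.5), (0.5, 0.6)]
    print(contours_to_svg([fake_lips]))
```

Drawing every capture into the same square, one path per contour, is what lets repeated expressions stack on top of each other while unusual ones stand out.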

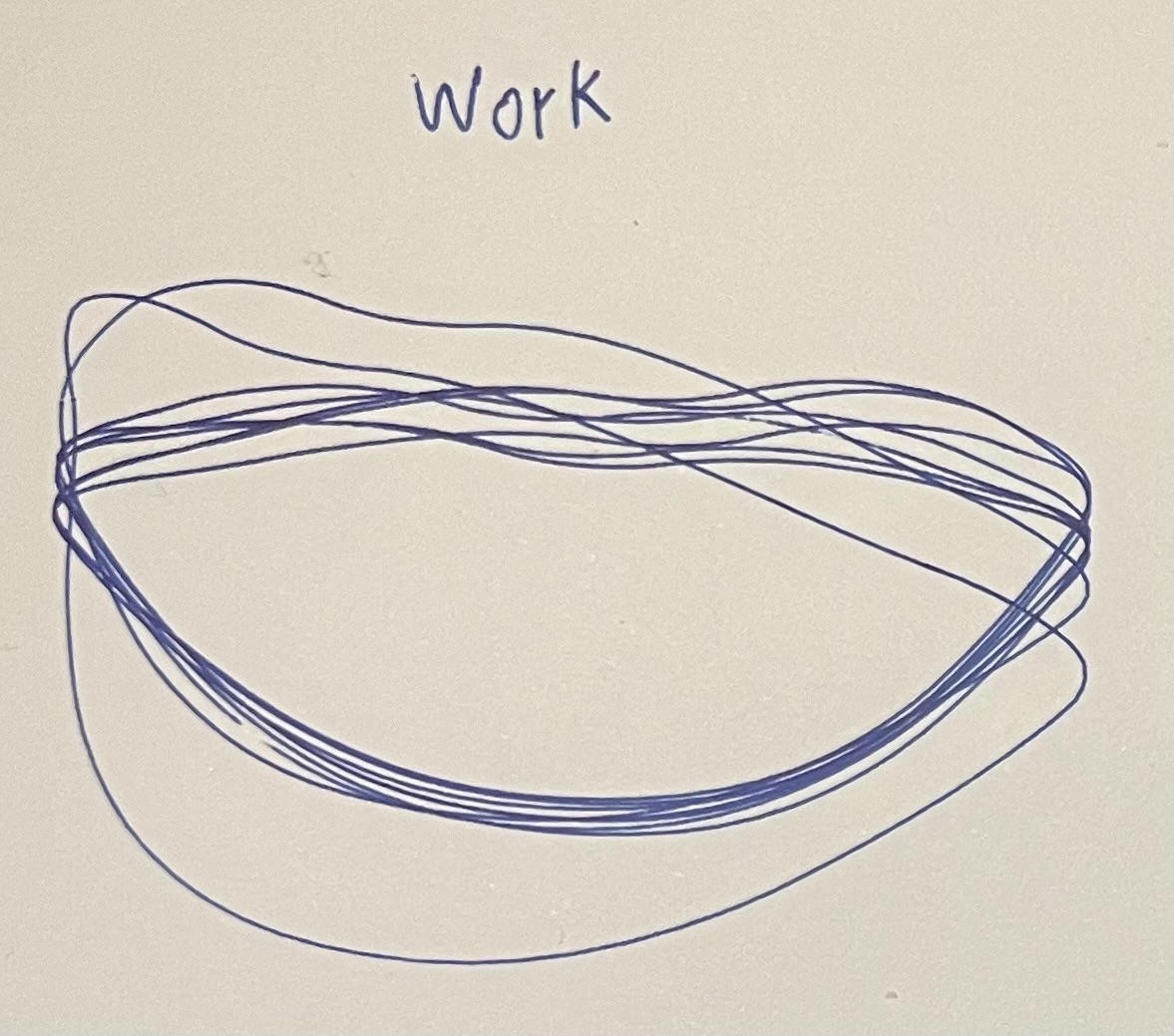

I was working through a coding assignment while I had my lip drawer running. We see that my lips are a bit further apart in this one than in the others. When I’m focused, I tend to have my mouth slightly open. You can also see that my expression is generally quite stationary. All but two of the contours are practically identical, which is again subconscious body language showing that I’m focused. So what about those other two contours, way out of whack compared to the rest? I’m not quite certain, but I’m betting that this is when I was getting frustrated with debugging and was twisting my mouth up into a scowl about some inexplicable behavior of my code.

So overall, when I’m working, we see that my emotional landscape (lipscape!?) is very even and consistent with the occasional dramatic variance.

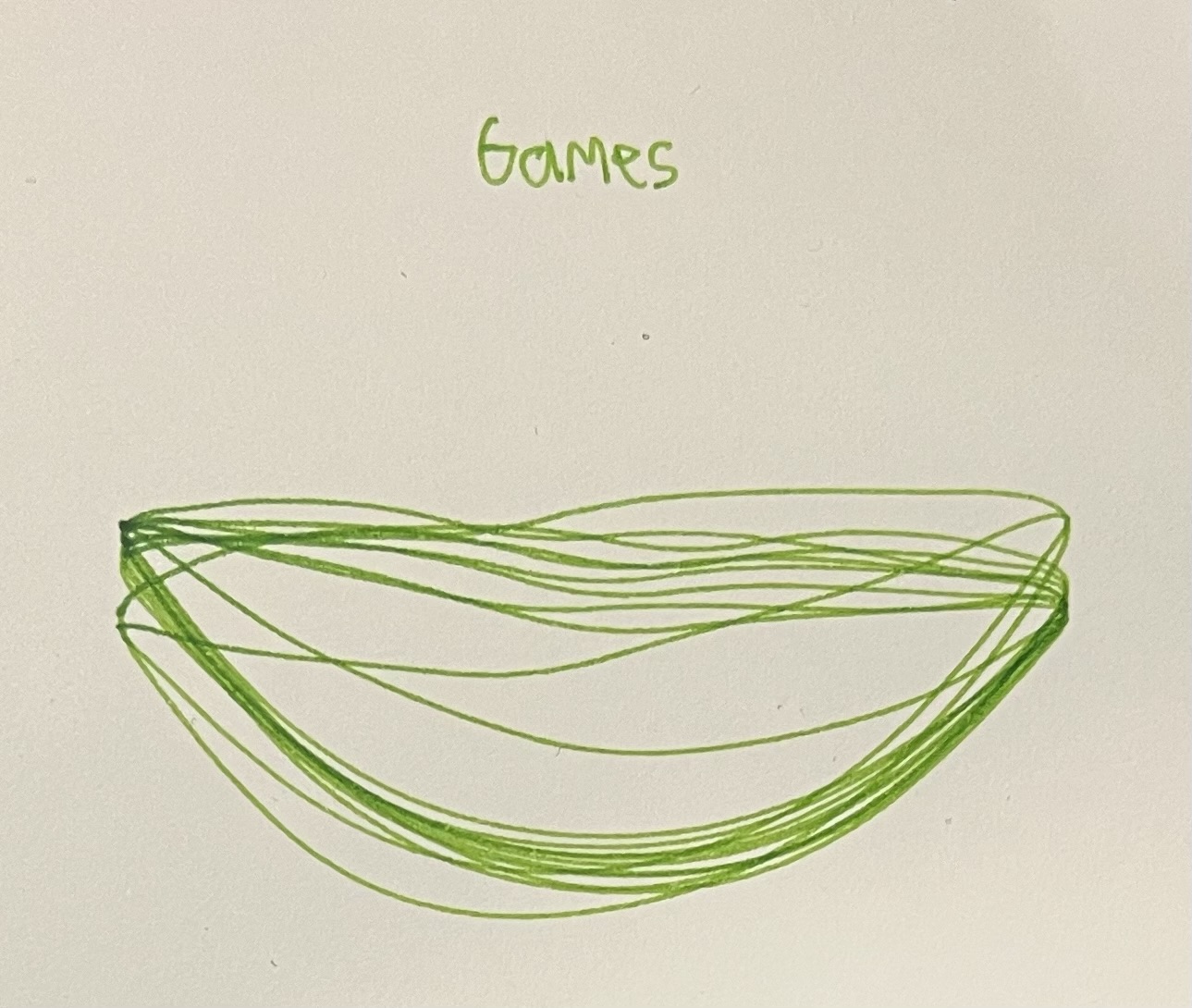

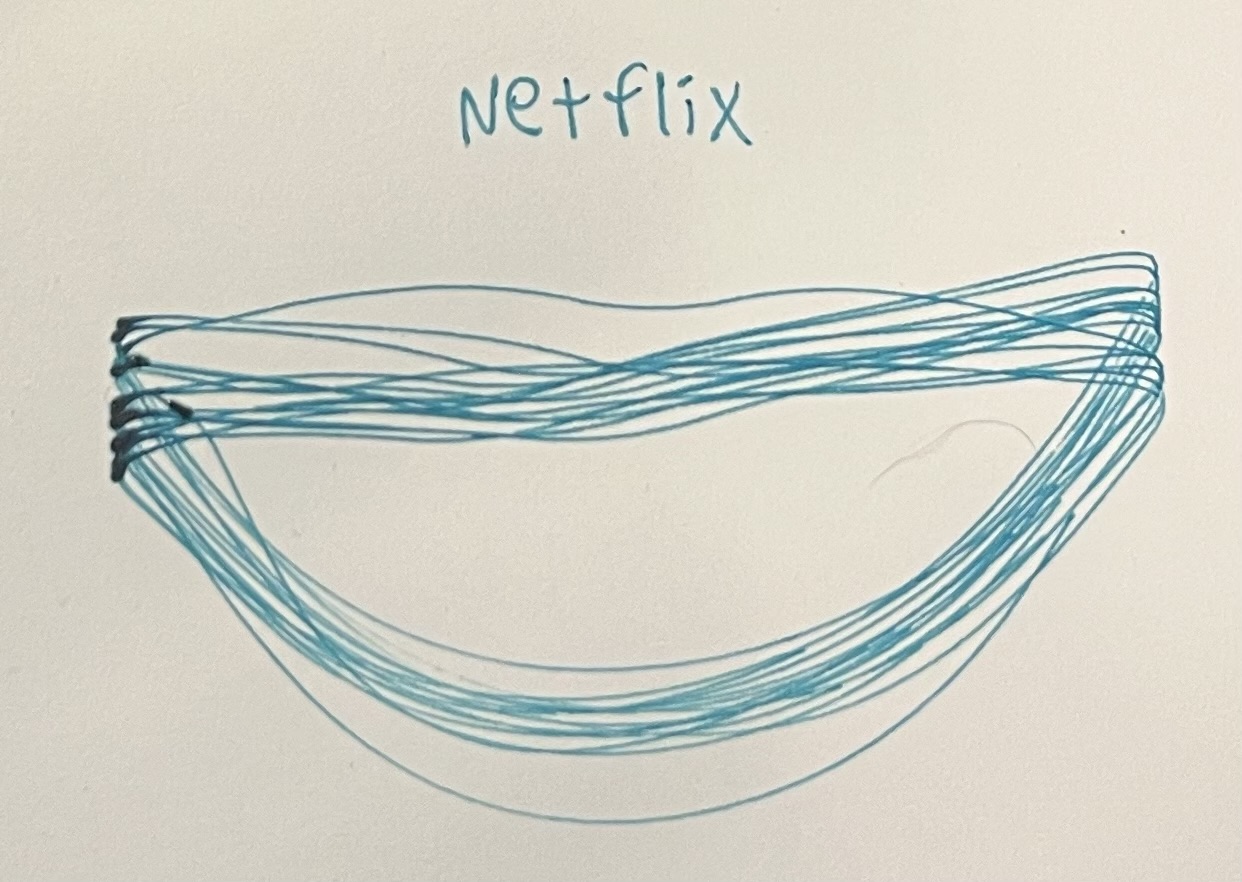

These contours were captured while I was playing a video game with a few friends. My lips are clearly happier here than when I am working, with a bunch of smiles and a few laughs captured by my machine.

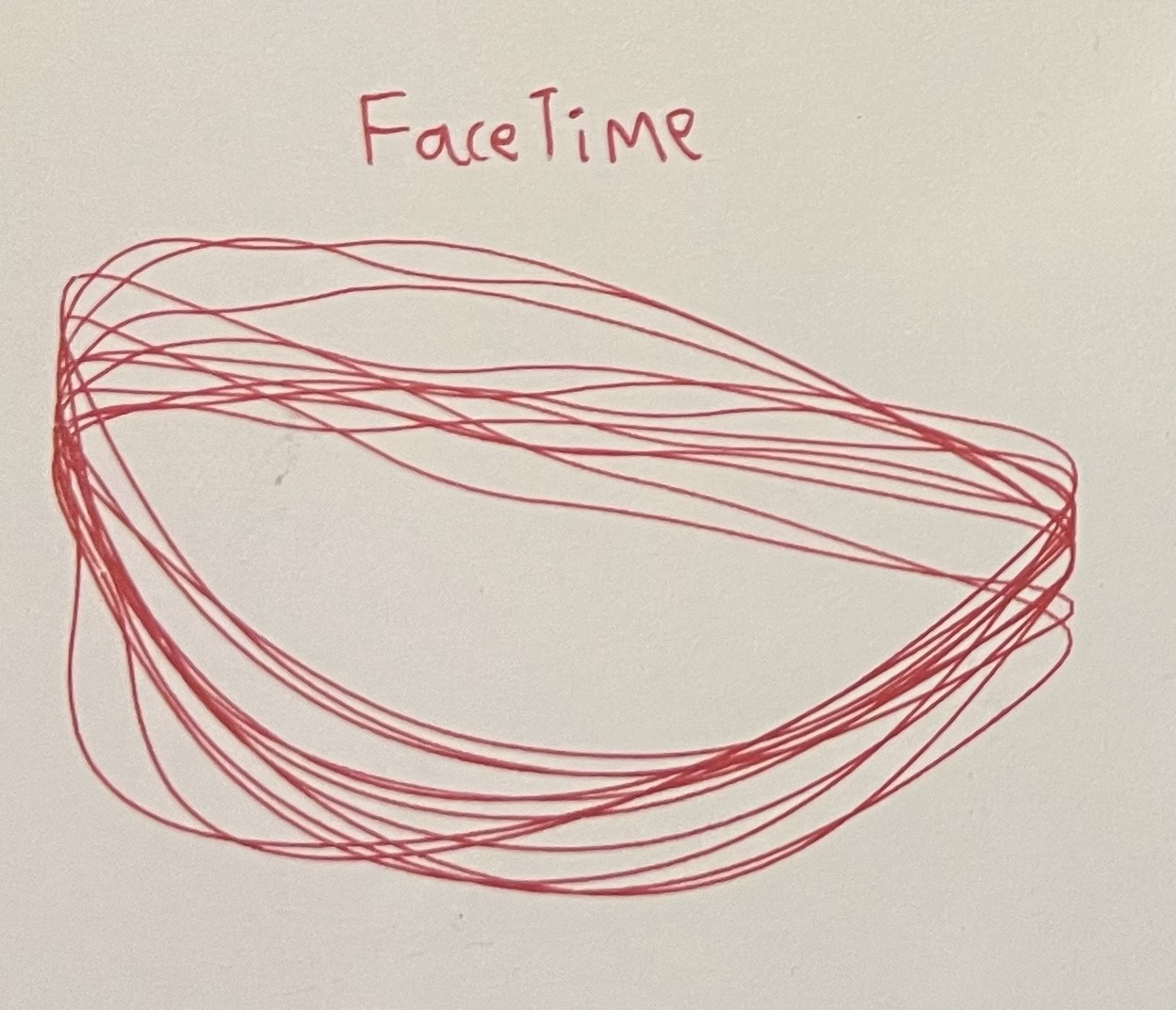

I captured these while I was on a long FaceTime call with my partner. We talked about everything from the weather to our opinions on neoliberalism, and ranged through an equally diverse set of emotions. My lips are highly dynamic here, with both smiles and frowns represented, and it’s clear that I was mid-sentence in many of the contours.

And this is kind of the opposite of the FaceTime capture. I took this capture right before going to bed, during my nightly pre-sleep half-episode of Game of Thrones, so I was quite worn out. We see that this is the most consistent of all four captures, with my lips remaining in a restful, neutral position throughout.

Overall, I think I am capturing something real here. This is far, far from the most precise way to measure emotion or represent data, but a big part of this week is about creating more empathetic, less clinical relationships with our data. (See Data Humanism by Giorgia Lupi!)

Find the project on GitHub here!